AI in Criminal Justice: Can Machines Be Just?

31 August 2025

Artificial Intelligence (AI) is transforming industries across the board, and the criminal justice system is no exception. From predictive policing to risk assessment tools used in sentencing and parole decisions, AI is already playing a significant role in how we manage crime and justice. But here's the kicker: Can machines truly be just? Can we trust an algorithm to make decisions that affect human lives?

In this article, we’re going to dive deep into the fascinating (and sometimes controversial) role AI is playing in criminal justice. We'll unpack the benefits and risks, explore the ethical concerns, and ask the big question of whether machines can deliver true justice.

The Rise of AI in Criminal Justice

It’s no secret that AI is changing the game in many industries, but in the realm of criminal justice, it’s making waves like never before. In the past decade, we’ve seen AI tools deployed in areas like:

- Predictive policing – where algorithms predict where crimes are likely to occur.

- Risk assessment – tools used to assess the likelihood of a defendant committing another crime.

- Facial recognition – used to identify suspects from surveillance footage.

These advancements are designed to make the system more efficient. After all, humans are prone to bias, fatigue, and sometimes just plain error. Machines, on the other hand, are supposed to be objective, consistent, and efficient. But is that really the case?

Predictive Policing: A Crystal Ball or a Dangerous Tool?

Predictive policing is one of the most talked-about applications of AI in criminal justice. Think of it as a high-tech crystal ball that tries to predict where crimes are likely to happen. Law enforcement agencies feed crime data into an algorithm, and voilà – the system makes predictions about crime hotspots. This allows police to allocate resources more efficiently and, in theory, prevent crime before it happens.

Sounds great, right?

Well, not so fast. While predictive policing may sound like a sci-fi dream come true, it has its fair share of complications. For starters, it relies on historical crime data. If the data itself is biased (spoiler: it often is), then the predictions will be biased too. For example, if certain neighborhoods have been over-policed in the past, the algorithm may flag those areas as high-risk, even if the residents aren’t more likely to commit crimes.

In other words, garbage in, garbage out. If the data is flawed, so are the predictions.
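To make the "garbage in, garbage out" point concrete, here is a minimal toy simulation in Python. Everything in it is an assumption made for illustration: the two neighborhoods, the identical 10 percent offence rate, the skewed starting records, and the function name `simulate_patrols` are hypothetical and do not describe any real predictive policing product.

```python
def simulate_patrols(rounds=5, total_patrols=100, hotspot_only=False):
    """Toy feedback loop: patrols follow recorded crime, and recorded
    crime grows wherever patrols are sent. Returns neighborhood A's
    share of all recorded incidents after each round."""
    # Assumption: both neighborhoods have the SAME underlying offence rate.
    true_rate = {"A": 0.10, "B": 0.10}
    # Assumption: historical records are skewed because A was over-policed.
    recorded = {"A": 60.0, "B": 40.0}
    shares = []
    for _ in range(rounds):
        if hotspot_only:
            # Send every patrol to the single highest-ranked "hotspot".
            top = max(recorded, key=recorded.get)
            patrols = {n: (total_patrols if n == top else 0) for n in recorded}
        else:
            # Split patrols in proportion to past recorded incidents.
            total = sum(recorded.values())
            patrols = {n: total_patrols * recorded[n] / total for n in recorded}
        # Incidents are only recorded where officers are present to see them.
        for n in recorded:
            recorded[n] += patrols[n] * true_rate[n]
        shares.append(recorded["A"] / sum(recorded.values()))
    return shares


print([round(s, 3) for s in simulate_patrols()])
print([round(s, 3) for s in simulate_patrols(hotspot_only=True)])
```

Under these assumptions, proportional allocation keeps neighborhood A at its initial 60 percent share of recorded incidents, so the historical skew never corrects itself even though both areas are equally risky. With `hotspot_only=True`, A's share rises every round, which is one way a biased starting point can be amplified rather than merely preserved.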

Risk Assessment: A Fair Judge or a Flawed System?

Risk assessment tools are another major area where AI has made its mark in criminal justice. These tools are used to evaluate the risk of a defendant committing another crime or failing to appear in court. Judges use this information to make decisions about bail, sentencing, and parole.

On paper, this sounds like a good idea. After all, who wouldn’t want a more objective way to make these critical decisions? However, these tools have come under scrutiny for perpetuating racial and socioeconomic biases.

Take, for instance, the widely used COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) tool. A 2016 ProPublica analysis found that Black defendants who did not go on to reoffend were nearly twice as likely as white defendants to be labeled high risk. The tool's developer disputed that analysis, but the findings raise serious concerns about whether these tools are truly fair and just.
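One common way to check for this kind of disparity is to compare false positive rates across groups: among people who did not reoffend, what fraction were still flagged as high risk? The sketch below is a minimal illustration in Python. The records are synthetic toy data invented for this example (not COMPAS data), and the group labels and the function name `false_positive_rate_by_group` are hypothetical.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: iterable of (group, flagged_high_risk, reoffended) tuples.
    Returns, per group, the share of non-reoffenders flagged as high risk."""
    flagged = defaultdict(int)
    non_reoffenders = defaultdict(int)
    for group, high_risk, reoffended in records:
        if not reoffended:
            non_reoffenders[group] += 1
            if high_risk:
                flagged[group] += 1
    return {g: flagged[g] / n for g, n in non_reoffenders.items()}


# Synthetic toy data, made up purely for illustration.
records = (
    [("group_1", True, False)] * 4      # flagged, did not reoffend
    + [("group_1", False, False)] * 6   # not flagged, did not reoffend
    + [("group_1", True, True)] * 5     # flagged, reoffended
    + [("group_2", True, False)] * 2
    + [("group_2", False, False)] * 8
    + [("group_2", True, True)] * 5
)

rates = false_positive_rate_by_group(records)
print(rates)  # {'group_1': 0.4, 'group_2': 0.2}
```

In this toy data, a person in group_1 who never reoffends is twice as likely to be wrongly flagged as a person in group_2. A gap like that is the sort of signal a bias audit would look for, although a real audit would also need confidence intervals and other fairness metrics.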

So, can machines be trusted to make life-altering decisions? That’s still up for debate.

The Ethical Dilemmas of AI in Criminal Justice

Here’s where things get tricky. The use of AI in criminal justice raises a host of ethical questions that we can’t ignore. Let’s break down some of the key concerns:

1. Bias in Algorithms

One of the biggest ethical concerns is that AI systems can inherit the biases of the data they’re trained on. If historical data reflects racial or gender biases, the AI system may perpetuate or even amplify those biases. For example, if a particular group has been disproportionately targeted by law enforcement in the past, an AI system might predict that members of that group are more likely to commit crimes, even if that’s not true.

This is where the question of algorithmic justice comes in. Can machines really be fair if they’re trained on biased data? Or are we just encoding our human prejudices into software?

2. Transparency and Accountability

Another issue is the lack of transparency in how these AI systems make decisions. Algorithms are often described as “black boxes” – we can see the inputs and the outputs, but we don’t always understand what happens in between. This lack of transparency makes it difficult to hold anyone accountable when things go wrong.

If a judge makes a bad decision, they can be questioned or even removed from the bench. But who do we hold accountable if an algorithm makes a bad decision? The company that developed the software? The government agency that implemented it?

3. Privacy Concerns

AI systems often rely on large amounts of data, which can raise serious privacy concerns. For example, predictive policing systems may draw on surveillance footage, social media activity, and other personal data to make their predictions. This can lead to a situation where people are being watched and judged by machines without their knowledge or consent.

Is it fair to use someone’s personal data to predict their likelihood of committing a crime? And how much data is too much?

Can AI Truly Be Just?

So, where does this leave us? Can machines truly be just? Well, it depends on how you define "justice."

If justice means fairness, transparency, and accountability, then AI still has a long way to go. While AI has the potential to make the criminal justice system more efficient, it also has the potential to make it more biased and opaque.

The Role of Human Oversight

One thing is clear: AI should never be used as a substitute for human judgment. Instead, it should be a tool to assist human decision-makers. Judges, police officers, and other officials should be trained to understand the limitations of AI systems and use them as one piece of the puzzle, rather than the whole picture.

Human oversight is crucial to ensuring that AI systems are used fairly and ethically. After all, justice isn’t just about efficiency – it’s about making sure that everyone is treated fairly and that their rights are protected.

The Future of AI in Criminal Justice

Looking ahead, the use of AI in criminal justice is only going to grow. But as it does, we need to be mindful of the risks and challenges that come with it. Here are a few things to consider as we move forward:

1. Improving Transparency

One of the most important steps we can take is to improve the transparency of AI systems. This means making it clear how these systems work, what data they’re using, and how they’re making decisions. Without this transparency, it’s impossible to hold anyone accountable when things go wrong.

2. Addressing Bias

We also need to find ways to address bias in AI systems. This could involve using more diverse training data, regularly auditing AI systems to check for bias, and involving a wider range of stakeholders in the development process.

3. Strengthening Human Oversight

Finally, we need to ensure that AI systems are always used in conjunction with human oversight. Machines can be incredibly powerful tools, but they should never be the sole decision-makers when it comes to matters of justice.

Conclusion: A Double-Edged Sword

AI in criminal justice is a double-edged sword. On one hand, it has the potential to make the system more efficient, more consistent, and less biased (in theory). On the other hand, it can also introduce new forms of bias and raise serious ethical and privacy concerns.

At the end of the day, machines are only as just as the people who build and use them. So, can machines be just? The answer is... maybe. But only if we approach the technology with caution, transparency, and a commitment to fairness.

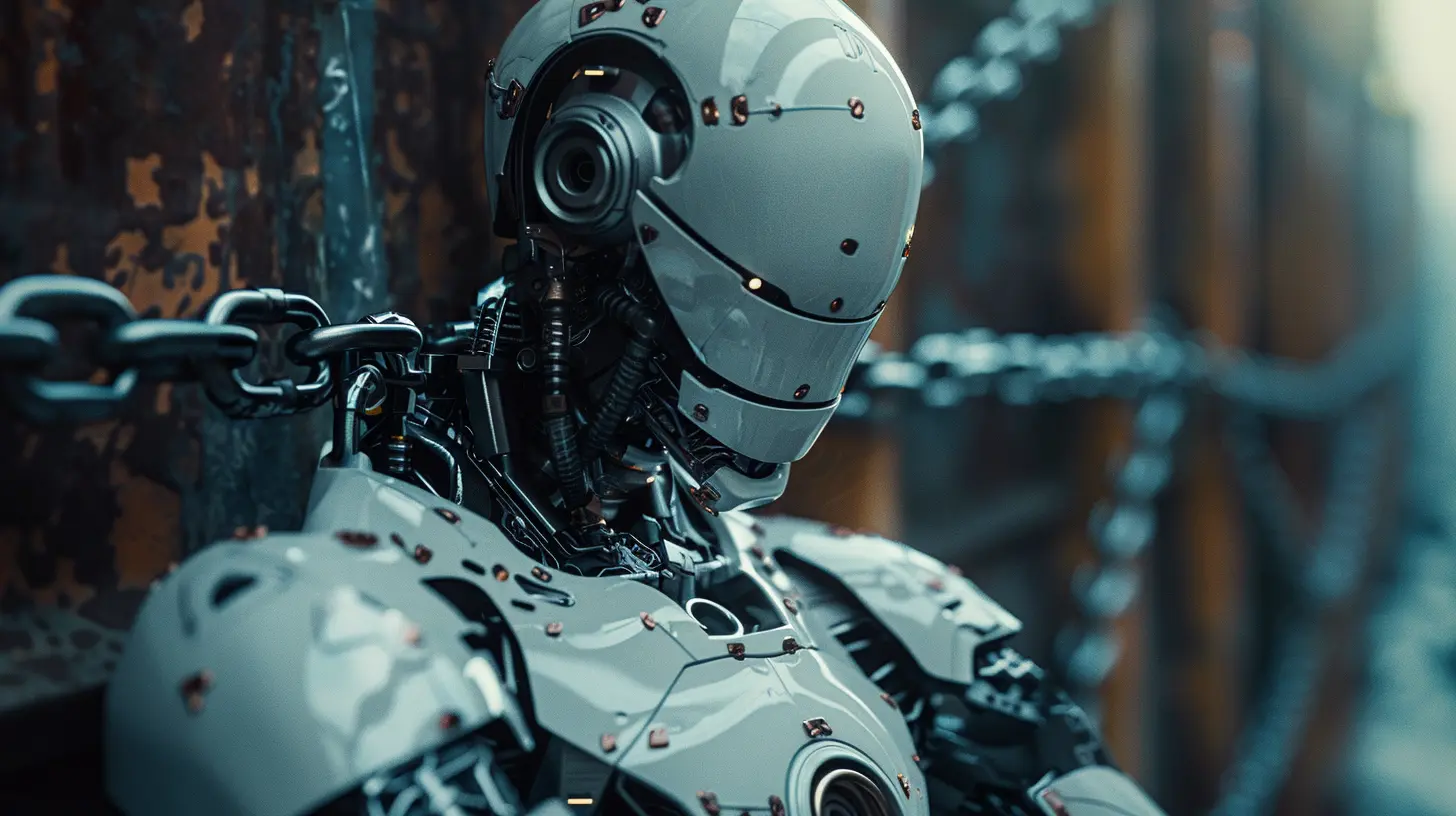

all images in this post were generated using AI tools

Category: AI Ethics

Author: Ugo Coleman

Discussion

1 comment

Kian Lee

While AI holds promise in enhancing efficiency within the criminal justice system, we must remain vigilant. Technology's inherent biases can exacerbate existing inequalities. True justice demands a human touch; machines should assist, not replace, our ethical responsibility to uphold fairness.

September 2, 2025 at 3:41 AM

Ugo Coleman

Thank you for your insightful comment. I completely agree—while AI can improve efficiency, we must prioritize ethical considerations and ensure human judgment remains central in the pursuit of true justice.