AI in Policing: Ethical Implications of Predictive Algorithms

19 March 2025

As technology continues to advance at breakneck speed, it's no surprise that Artificial Intelligence (AI) is making its way into nearly every corner of society — and policing is no exception. AI-driven tools and predictive algorithms are increasingly being used in law enforcement to help identify potential criminal activities, profile suspects, and even allocate resources more effectively. Sounds like something out of a sci-fi movie, right? But here's the thing: while the idea of AI in policing might seem like a technological leap forward, it brings with it a whole host of complicated ethical dilemmas.

So, what's the deal with AI in policing? Why are people talking about it? And, the big question: Is it a good or bad thing for society? Let’s dive deep into the ethical implications of predictive algorithms in law enforcement and see where the lines get a little blurry.

What Is AI in Policing?

Before we get into the nitty-gritty of the ethical concerns, let's first define what AI in policing actually means. When we talk about AI in this context, we're referring to the use of machine learning algorithms and advanced data analytics to assist police forces. These systems analyze vast amounts of data (everything from crime statistics and social media activity to facial recognition and neighborhood demographics) to make predictions about where crimes might occur, who might commit them, or even which areas need more police presence.

Sounds helpful, right? Imagine a system that could predict a crime before it happens, like a real-life version of Minority Report. But AI in policing isn't that simple. These systems don’t just sit there in a vacuum; they actually influence decisions that directly affect people's lives, and that's where things get ethically sticky.

The Promise of Predictive Policing

Let’s be real for a second. The idea behind predictive policing is kind of genius. In theory, AI can help law enforcement be more efficient. Instead of just reacting to crimes after they happen, police can preemptively focus their efforts on high-risk areas or individuals, potentially stopping crimes before they even occur. It’s like having a crystal ball that tells you where to look.

Crime Prediction

One of the most hyped aspects of AI in policing is predictive crime mapping. Algorithms analyze historical crime data and identify patterns that suggest where future crimes might occur. Think of it like weather forecasting, but for crime. If certain kinds of crimes tend to spike in one neighborhood at a particular time, AI can flag those areas for increased patrols.

Resource Allocation

AI can also help police departments allocate resources more effectively. Instead of spreading officers thin across a city, predictive algorithms can help departments strategically deploy their forces to areas where they’re most needed. In a world where police budgets are often stretched thin, this could be a game-changer.

Identifying Potential Criminals

Some AI systems go even further by attempting to predict individuals who may commit crimes based on their past behavior and interactions with the justice system. This is where things start feeling a little like Big Brother is watching — more on that in a bit.
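To make the crime-mapping idea from this section a bit more concrete, here is a minimal sketch in Python. It is not how any particular vendor's product works; the `(grid_cell, hour)` incident format, the six-hour time blocks, and the function name `top_hotspots` are all assumptions made up for illustration. It simply counts recorded incidents per map cell and time block and returns the busiest ones.

```python
from collections import Counter

def top_hotspots(incidents, k=3):
    """Rank (grid_cell, time_block) pairs by number of recorded incidents.

    incidents: iterable of (grid_cell, hour) pairs, hour in 0..23.
    This input format is hypothetical, chosen only for the example.
    """
    # Bucket each incident into one of four 6-hour blocks (0..3).
    counts = Counter((cell, hour // 6) for cell, hour in incidents)
    return counts.most_common(k)

# Made-up sample data, not real crime records.
incidents = [
    ("C3", 22), ("C3", 23), ("C3", 21),
    ("A1", 9), ("A1", 10),
    ("B2", 14),
]
hotspots = top_hotspots(incidents, k=2)
print(hotspots)
```

Note that the counts only reflect incidents that were recorded. A cell that is patrolled more heavily will tend to generate more records, so a ranking like this measures police activity as much as it measures crime.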

The Ethical Concerns

Okay, now that we understand the potential benefits, it’s time to talk about the elephant in the room: the ethical issues. Because while AI in policing sounds good on paper, in practice, things can get a little murky.

Bias in the Data

One of the biggest concerns with AI in policing is algorithmic bias. AI is only as good as the data it's fed. If the data used to train these algorithms is biased (and spoiler alert, it often is), the AI will produce biased outcomes. For example, if historical crime data shows more arrests in certain minority neighborhoods, the algorithm may conclude that these areas are inherently more prone to crime, leading to more aggressive policing in those communities.

It’s a vicious cycle. The police focus more on certain neighborhoods, leading to more arrests, which then feeds back into the AI system, reinforcing the idea that those neighborhoods are high-crime areas. This creates a feedback loop that disproportionately affects minority communities and perpetuates systemic discrimination.
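The feedback loop described above can be illustrated with a toy simulation. This is a deliberately simplified sketch, not a model of any real system: the two neighborhoods, the starting arrest counts, the patrol budget, and the "send every patrol to the current top hotspot" rule are all assumptions chosen for the example. Both neighborhoods are given the same underlying crime rate, so any gap that grows comes from the allocation rule alone.

```python
def simulate_hotspot_feedback(true_rate, recorded, rounds=5, patrols=100):
    """Toy feedback loop (hypothetical model, not a real system).

    Each round, all patrols go to the neighborhood with the most recorded
    arrests. Arrests made there (patrols * true_rate) are added to its
    record, which then drives the next round's allocation.
    """
    recorded = dict(recorded)  # copy so the caller's data is untouched
    shares = []
    for _ in range(rounds):
        target = max(recorded, key=recorded.get)
        recorded[target] += patrols * true_rate[target]
        total = sum(recorded.values())
        shares.append({name: count / total for name, count in recorded.items()})
    return recorded, shares

# Identical true crime rates; only the historical record differs.
true_rate = {"A": 0.1, "B": 0.1}
start = {"A": 60, "B": 40}
final, shares = simulate_hotspot_feedback(true_rate, start)

for round_number, share in enumerate(shares, start=1):
    print(round_number, round(share["A"], 3))
```

In this setup neighborhood A starts with 60% of recorded arrests and its share rises every round, even though A and B are equally "criminal" by construction. Neighborhood B is never patrolled, so nothing new is ever recorded there and the data has no chance to correct itself.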

Transparency and Accountability

Another major ethical concern is the lack of transparency in how these algorithms work. Many AI tools used in law enforcement are proprietary, meaning the companies that develop them don’t always disclose how the algorithms make their decisions. This lack of transparency raises serious questions about accountability. If an AI system flags someone as a potential criminal, but no one knows how that decision was made, who’s responsible? The police? The AI developers? The algorithm itself?

And let’s not forget about the public’s right to understand how these systems are impacting their lives. If you’re being disproportionately targeted by predictive policing software, shouldn’t you have the right to know why?

Privacy Invasion

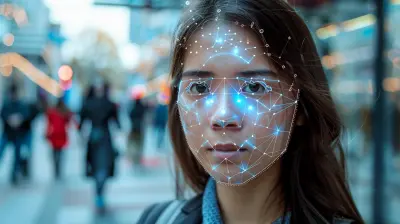

AI in policing often involves the use of data from a variety of sources, including social media, CCTV footage, and even facial recognition technology. While this can help law enforcement solve crimes, it also raises serious privacy concerns. How much surveillance is too much? And where do we draw the line between using technology for public safety and violating individuals' rights to privacy?

Facial recognition, in particular, has been widely criticized for its potential to infringe on civil liberties. There have been numerous instances where facial recognition systems have misidentified individuals, leading to wrongful arrests. Even more concerning is the fact that these errors are more likely to occur with people of color, highlighting yet another layer of bias in AI-driven policing.

Pre-crime and Profiling

Here’s where things get downright dystopian. Some AI systems are being designed to predict not just where crimes will happen, but who is likely to commit them, before they actually do anything wrong. This raises all sorts of ethical red flags. Should someone be flagged as a potential criminal based solely on an algorithm’s prediction, even if they haven’t actually done anything illegal? That sounds a lot like punishing someone for a crime they haven’t committed yet.

Profiling individuals based on AI predictions can easily lead to over-policing certain groups, particularly minorities, the mentally ill, or people from lower socioeconomic backgrounds. This not only undermines the principle of innocent until proven guilty, but it also risks stigmatizing whole communities.

The Role of Human Judgment

One argument in favor of AI in policing is that these systems are meant to assist, not replace, human decision-making. In theory, police officers can use AI predictions as one tool in their toolbox, but still exercise their own judgment. That sounds reasonable, but in practice, there’s a risk that officers might rely too heavily on the algorithms, treating their predictions as fact rather than guidance.

After all, algorithms can seem more objective or impartial than humans. But as we’ve already discussed, they can be just as biased as the data they’re trained on. If police officers start to trust AI predictions without questioning them, we could end up in a world where human judgment takes a backseat, and AI-driven biases shape how law enforcement operates.

Potential Solutions and Safeguards

So, where do we go from here? AI in policing isn't going to disappear anytime soon, but there are ways we can mitigate some of the ethical concerns.

Auditing and Accountability

First things first, we need more transparency. AI systems used in law enforcement should be subject to rigorous audits to ensure they aren't perpetuating bias or making unfair predictions. This could involve opening up the algorithms to independent review or creating industry standards for transparency and accountability.

Data Quality and Diversity

Improving the quality and diversity of the data used to train these algorithms is also crucial. If AI systems are going to be used in policing, they need to be trained on datasets that are representative of the entire population, not just certain groups. This could help reduce some of the biases that currently plague predictive algorithms.

Human Oversight

While AI can be a useful tool, it should never replace human judgment. Police officers need to be trained to use AI responsibly, understanding that it’s just one piece of the puzzle. Ultimately, humans should always have the final say in law enforcement decisions, not machines.

Legal Frameworks

Finally, we need stronger legal frameworks to protect individuals’ rights in the age of AI-driven policing. This could involve updating privacy laws to address the use of surveillance technologies or creating new regulations specifically designed to govern the use of AI in law enforcement.
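As one rough idea of what the audits mentioned in this section could check, the sketch below compares how often a system flags people in different groups, and how often it flags people who did not go on to offend (the false positive rate). Everything here is an assumption for illustration: the `(group, flagged, offended)` record format, the function name `audit_by_group`, and the sample data are invented, and a real audit would need far more than two numbers per group.

```python
from collections import defaultdict

def audit_by_group(records):
    """Per-group flag rate and false positive rate.

    records: iterable of (group, flagged, offended) tuples, where
    `flagged` is the system's prediction and `offended` is the later
    observed outcome. This schema is hypothetical.
    """
    counts = defaultdict(lambda: {"n": 0, "flagged": 0, "neg": 0, "false_pos": 0})
    for group, flagged, offended in records:
        c = counts[group]
        c["n"] += 1
        c["flagged"] += int(flagged)
        if not offended:
            # Person did not offend: a flag here is a false positive.
            c["neg"] += 1
            c["false_pos"] += int(flagged)
    report = {}
    for group, c in counts.items():
        report[group] = {
            "flag_rate": c["flagged"] / c["n"],
            # None when a group has no non-offenders to measure against.
            "false_positive_rate": c["false_pos"] / c["neg"] if c["neg"] else None,
        }
    return report

# Made-up records, not real data.
records = [
    ("X", True, False), ("X", True, True), ("X", False, False), ("X", True, False),
    ("Y", False, False), ("Y", True, True), ("Y", False, False), ("Y", False, False),
]
report = audit_by_group(records)
print(report)
```

In this invented sample, both groups contain the same share of people who later offended, yet group X is flagged three times as often and carries all of the false positives. A gap like that is the kind of signal an independent reviewer would want explained.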

Conclusion

AI in policing holds incredible potential, but it also raises serious ethical concerns that we can’t afford to ignore. From biased algorithms to privacy invasion, the risks are real, and if we’re not careful, we could end up with a system that perpetuates inequality rather than promoting justice. While technology can certainly make law enforcement more efficient, we need to ensure that it doesn’t come at the cost of fairness, accountability, and human rights.

The bottom line? AI can be a powerful ally in the fight against crime, but only if we handle it responsibly. And that means asking the tough questions, addressing the ethical implications head-on, and never losing sight of the fact that, at the end of the day, policing is about protecting people, all people.

All images in this post were generated using AI tools.

Category: AI Ethics

Author: Ugo Coleman

Discussion

9 comments

Zorina Kirk

This article raises intriguing questions about the intersection of AI and ethics in policing. I'm curious to see how predictive algorithms can enhance public safety while ensuring transparency and fairness. Balancing innovation with accountability is essential for a just society.

April 8, 2025 at 3:19 AM

Ugo Coleman

Thank you for your insightful comment! Balancing innovation and accountability is indeed crucial as we explore the ethical implications of AI in policing. Your curiosity about transparency and fairness highlights key concerns that must be addressed.

Nadia Martin

AI in policing? Let's hope it doesn’t start predicting what’s for dinner next! 🍕🤖

April 6, 2025 at 11:46 AM

Ugo Coleman

While AI in policing aims to enhance safety, it's crucial to ensure ethical guidelines prevent misuse, including trivializing its purpose. Predictive algorithms should focus on public safety, not personal choices.

Selene Lawson

Critical insights needed on bias risks.

April 6, 2025 at 4:52 AM

Ugo Coleman

Thank you for your comment! Bias in predictive algorithms can lead to unfair targeting and reinforce existing disparities. Critical assessment of data sources, algorithm design, and ongoing monitoring is essential to mitigate these risks.

Phoebe Castillo

Ah, yes, let's hand over the keys to our justice system to algorithms! What could possibly go wrong? I'm sure they’ll be completely unbiased and won’t confuse a cat for a criminal. Sounds foolproof!

April 2, 2025 at 3:03 AM

Ugo Coleman

Your concerns are valid. It's crucial to address bias in algorithms and ensure accountability in AI to safeguard justice.

Patience Hernandez

This article raises important considerations about the ethical implications of using AI in policing. Balancing innovation with civil liberties is essential. I appreciate the insights shared and look forward to ongoing discussions on responsible AI deployment in law enforcement.

April 1, 2025 at 6:56 PM

Ugo Coleman

Thank you for your thoughtful comment! I agree that balancing innovation with civil liberties is crucial in the discussion of AI in policing. I'm glad you found the insights valuable, and I look forward to further dialogue on this important topic.

Selena Griffin

This article raises crucial points about the ethical implications of AI in policing. It's essential to balance innovation with accountability, ensuring technology serves justice without compromising our values or communities.

March 28, 2025 at 1:06 PM

Ugo Coleman

Thank you for your insightful comment! Balancing innovation and accountability is indeed vital in ensuring that AI serves justice while upholding our core values.

Kyle Rivera

Predictive algorithms: science fiction or a civil rights nightmare?

March 21, 2025 at 8:27 PM

Ugo Coleman

Predictive algorithms in policing can enhance public safety but pose significant ethical risks, including biases and privacy concerns. It's essential to balance innovation with accountability to avoid a civil rights nightmare.

Megan Turner

The integration of predictive algorithms in policing raises significant ethical concerns, including bias, accountability, and transparency. Striking a balance between technological advancement and civil liberties is crucial to ensure equitable law enforcement practices.

March 21, 2025 at 5:29 AM

Ugo Coleman

Thank you for your insightful comment! Balancing technological progress with ethical considerations is indeed essential for fostering equitable policing practices. Your points on bias, accountability, and transparency are crucial in this ongoing discussion.

Leah Shaffer

Predictive algorithms in policing: tech's way of turning justice into a guessing game—yikes!

March 19, 2025 at 8:19 PM

Ugo Coleman

Thank you for your comment! It's crucial to recognize that while predictive algorithms can enhance policing, they also raise significant ethical concerns that must be addressed to ensure justice is served fairly.
