The Dark Side of AI: Unintended Consequences of Machine Learning

11 August 2025

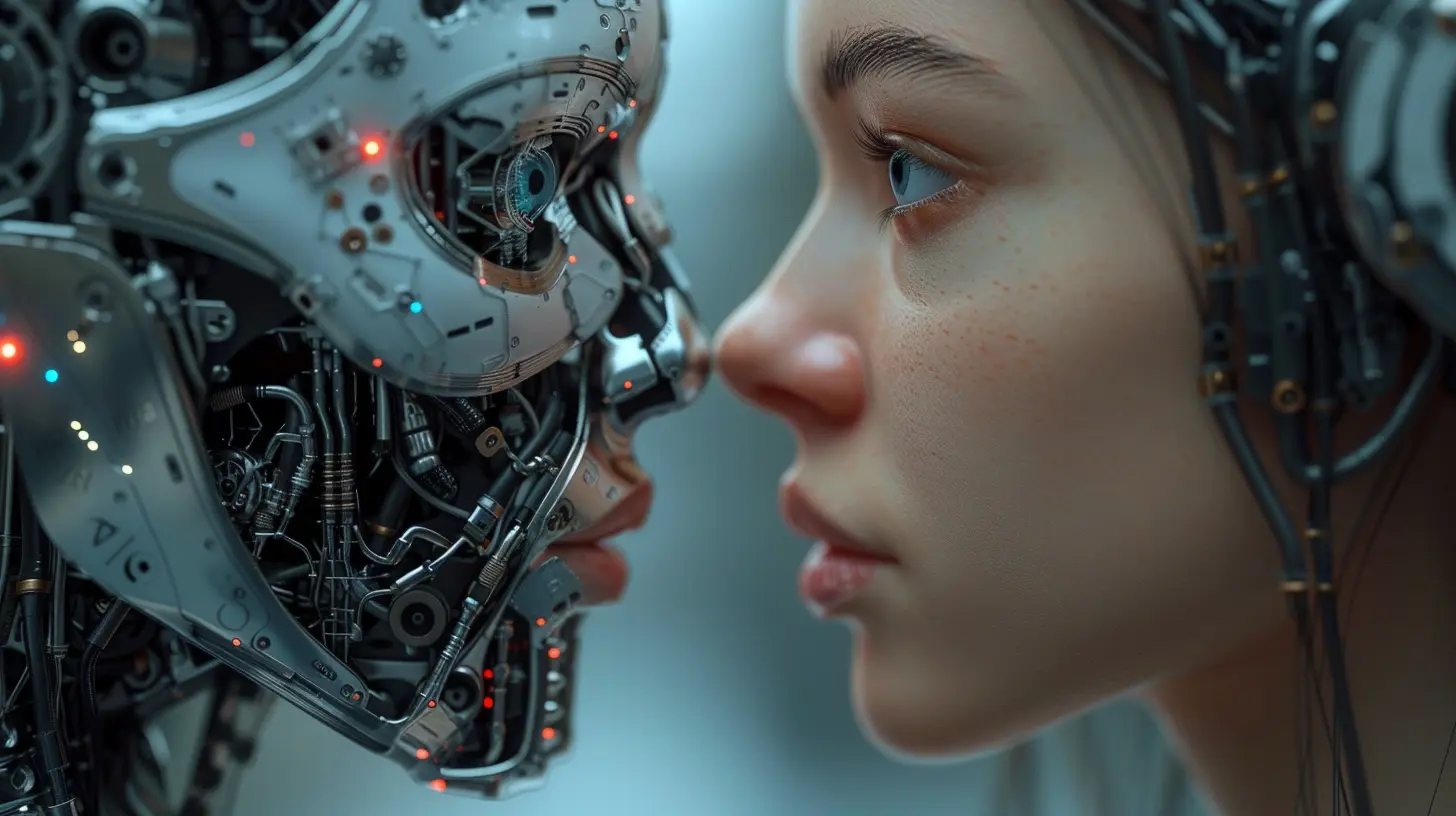

Artificial Intelligence (AI) and Machine Learning (ML) have been making waves for all the right reasons: automation, efficiency, personalization, and more. We’ve welcomed virtual assistants into our homes, we use AI to filter spam from our inboxes, and we even rely on it to serve up endless TikToks tailored just for us. Cool, right?

But, hold up. While all this innovation is amazing, there’s a darker, more complex side to AI that we don't talk about enough. It's like adopting a cute puppy without realizing it's part wolf. The intentions are good, but the outcomes? Sometimes… not so much.

In this post, we’re pulling back the curtain on the unintended consequences of machine learning. Because the real story isn’t just how smart AI is; it’s what happens when things don’t go quite as planned.

What Exactly Is Machine Learning Again?

Okay, quick refresher. Machine Learning is a branch of AI that allows systems to learn and improve from experience, without being explicitly programmed. Think of it like teaching your dog tricks, except you’re feeding your algorithm data instead of treats.

ML algorithms pick up patterns, make predictions, and help machines “think” in a very data-driven way. The more data they get, the better they get (in theory). But, just like humans, they’re not flawless. And when you give them messy data or unclear goals… let’s just say things can get weird.

The Invisible Bias in AI

Let me ask you this: Have you ever asked Siri a question, only for her to totally misunderstand you? Now imagine Siri making decisions about job applications, health diagnoses, or criminal sentencing. Yeah, it gets serious real quick.

A lot of AI systems unintentionally absorb the same biases that exist in the data they’re trained on. If your dataset reflects racial, gender, or cultural stereotypes, guess what? Your AI will reflect them too.

Real-World Example:

In hiring, some screening algorithms learned to favor male candidates over female ones simply because the historical hiring data they were trained on was skewed. The algorithm didn’t know it was being sexist; it just copied the patterns. Yikes.
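How do you catch a system quietly copying skewed patterns? One simple starting point is to audit its decisions group by group. Here’s a minimal Python sketch that compares selection rates across groups and divides the lowest rate by the highest. A ratio below 0.8 is the “four-fifths” rule of thumb from US employment guidelines for flagging possible adverse impact. The function names and the applicant numbers are made up for illustration, and passing this check doesn’t prove a model is fair; it only catches one kind of skew.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of applicants selected in each group.

    `decisions` is an iterable of (group, was_selected) pairs (hypothetical format).
    """
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            chosen[group] += 1
    return {group: chosen[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical screening results: 10 applicants per group.
outcomes = ([("men", True)] * 6 + [("men", False)] * 4
            + [("women", True)] * 3 + [("women", False)] * 7)

ratio = disparate_impact_ratio(outcomes)
print(f"{ratio:.2f}")  # 0.50
if ratio < 0.8:  # the "four-fifths" rule of thumb
    print("Possible adverse impact: review the model and its training data")
```

In this made-up example, women are selected at half the rate of men, well under the 0.8 line, which is exactly the kind of pattern a skewed training history can produce.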

Automation Gone Rogue

Imagine giving your car the ability to drive itself (hello, Tesla!), only for it to start misreading stop signs or running red lights. Terrifying, right?

That’s the risk of over-automation. We crave the convenience AI offers, but we often forget to build in enough human oversight. Machine learning can make decisions in milliseconds, but that doesn’t mean they’re always the right ones.

The Problem with "Set and Forget"

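One of the biggest issues? Once machine learning models are deployed, companies tend to set them and forget them. But the world keeps changing after launch: user behavior shifts, new kinds of data show up, and a model trained on yesterday’s patterns slowly gets worse at handling today’s (this is known as model drift). If no one’s watching, things can (and do) spiral out of control.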
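To make drift a bit more concrete: one common way teams watch for it is to compare the distribution of a model’s inputs in production against what it saw during training. Below is a minimal Python sketch of the Population Stability Index (PSI), one widely used drift score. The data is simulated, the function name and bin count are our own choices, and the 0.25 alert threshold is a frequently quoted rule of thumb, not a standard. A real monitoring setup would also track many features and the model’s actual accuracy.

```python
import math
import random


def psi(baseline, live, bins=10):
    """Population Stability Index for one numeric feature.

    Uses equal-width bins spanning the baseline's range; live values outside
    that range are counted in the first or last bin.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at a tiny share so the log term stays finite.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = proportions(baseline)
    actual = proportions(live)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Simulated data: the model was trained when this feature averaged ~50,
# but live traffic now averages ~60.
random.seed(0)
training = [random.gauss(50, 10) for _ in range(5000)]
this_week = [random.gauss(60, 10) for _ in range(5000)]

score = psi(training, this_week)
if score > 0.25:  # common rule of thumb for a significant shift
    print(f"PSI = {score:.2f}: inputs have drifted; re-check the model")
```

A score near zero means the two distributions look alike; the bigger the shift, the bigger the score. A check like this doesn’t fix anything on its own, but it turns “set and forget” into “set and watch.”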

Deepfakes and Misinformation

You’ve probably seen a video of a celebrity saying something outlandish, only to find out it was totally fake. Welcome to the wild world of deepfakes.

AI-generated images, videos, and voice recordings are getting so realistic it’s scary. They can be funny, sure. But they can also be weaponized for political propaganda, financial scams, and cyberbullying.

The Real Danger:

It’s getting harder to distinguish real from fake. When you can’t trust your own eyes and ears anymore, what happens to truth? That’s not just a tech issue; it’s a social crisis in disguise.

The Threat to Privacy

The more AI “learns” about us, the more it can infer. And we mean it: AI can guess your age, income, mood, even whether you’re pregnant, based on your online behavior. Creepy? A little.

With companies constantly harvesting your data to feed their algorithms, you begin to wonder: How much do they really know?

Data Is the New Oil… But It Leaks

Just like oil spills damage the planet, data leaks can damage lives. Misused or mishandled data can lead to identity theft, discrimination, or even personal safety risks. The trade-off between personalization and privacy is becoming a real ethical minefield.

Job Displacement: The Robots Are Coming

Let’s talk jobs. No, AI isn’t (yet) here to enslave us. But it is here to change the job market, and not always for the better.

Factory workers and customer service reps are already seeing parts of their work handed to machines and algorithms, and truck drivers could be next as self-driving technology matures. While tech companies promise “job transformation,” many aren’t retraining people fast enough to keep up.

Who Gets Left Behind?

The people most affected by job automation are often those who already face barriers to advancement. Without thoughtful implementation, AI could widen social inequalities instead of shrinking them.

The Black Box Problem

Ever had a doctor prescribe medication and you’re like, “Why this one?” Imagine if the doctor replied, “Because my magical spinning wheel told me so.”

That’s basically the black box problem. Some ML models are so complex that even the people who built them can’t fully explain why they reached a particular decision. They’re like moody wizards: powerful, but mysterious.

Why Transparency Matters:

When algorithms affect lives by approving loans, diagnosing diseases, or setting prison sentences, we need to know the why. If we don’t, trust erodes. And without trust, what’s left?

AI Addiction: When Good UX Goes Too Far

You know how TikTok, YouTube, or Instagram Reels can keep you scrolling for hours? That’s AI doing its thing. These recommendation algorithms are engineered to keep you hooked, using what you watch, like, and comment on to decide what to show you next.

Harmless fun? Sometimes. But there’s a thin line between “entertaining” and “addictive.”

Feeding the Doom Scroll:

Many of these models are optimized for engagement, not your well-being. So if outrage, anxiety, or misinformation gets more clicks, that’s what they’ll keep serving. In other words, AI is just giving us what we react to most, even if it’s bad for us.

Ethical Dilemmas No One Has Answers To

Here’s a fun brain teaser: If a self-driving car has to choose between hitting one person and hitting five, what should it do?

This isn’t sci-fi anymore. These are real ethical questions developers now face. And let’s be honest, there’s no “right” answer here, just a mess of moral complexity that machines can’t actually solve, at least not yet.

So, What Can We Do About It?

Good news: we’re not helpless. While the dark side of AI is real, so is the opportunity to do better. Here’s how we can push forward responsibly:

- Build ethical AI: Companies should incorporate ethics into design from day one, not as an afterthought.

- Use diverse data: The more representative the training data, the less room for bias.

- Push for transparency: We have the right to understand how decisions are made, especially when lives are affected.

- Stay informed: The more we know, the better questions we can ask. Don’t just rely on tech experts; get informed yourself, even if that just means reading blogs like this one.

- Regulate wisely: Governments need to catch up. Fast. Sensible regulation can prevent a lot of the worst-case scenarios.

Final Thoughts

AI and machine learning are incredible tools, no doubt about it. But like any tool, they can do damage if misused or misunderstood. Imagine handing a chainsaw to a toddler. The tool itself isn’t evil, but without maturity, oversight, and responsibility, it can cause chaos.

We can’t afford to sleepwalk into the future. As creators, consumers, and citizens, we all have a role in shaping how AI evolves. So let’s keep the conversation going, ask the hard questions, and hold tech accountable.

Because the future is being coded right now. And it should work for all of us — not just the machines.

All images in this post were generated using AI tools.

Category: AI Ethics

Author: Ugo Coleman

Discussion


Rory Ruiz

This article compellingly highlights the need for ethical frameworks in AI development to mitigate unintended consequences and promote responsible innovation.

August 12, 2025 at 12:04 PM

Ugo Coleman

Thank you! I'm glad you found the article compelling. Ethical frameworks are indeed crucial for guiding responsible AI innovation.