The Ethics of AI in Emotional Manipulation: Can Machines Manipulate Us?

22 May 2025

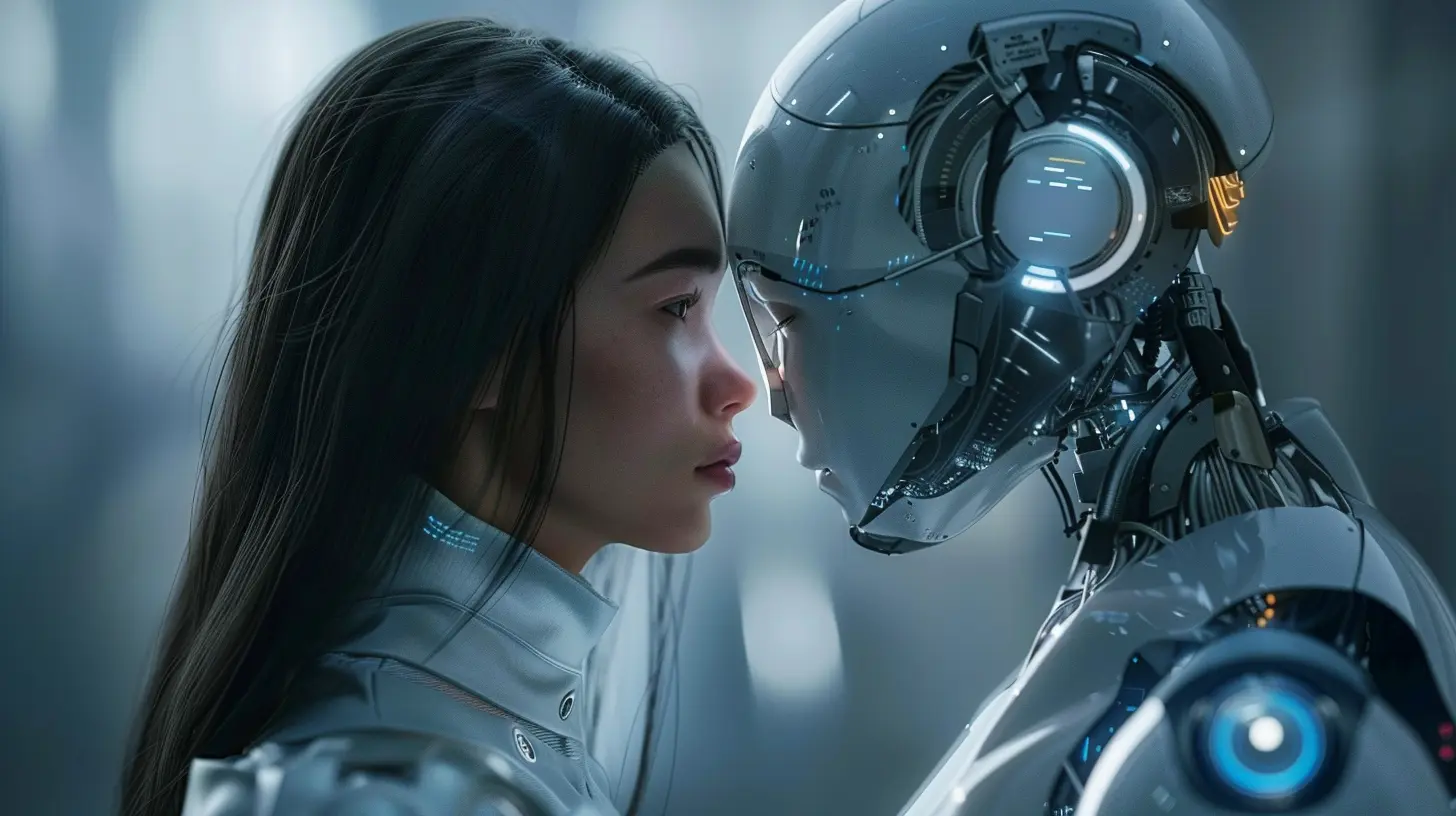

Artificial intelligence (AI) has been making waves in almost every aspect of our lives, from virtual assistants to self-driving cars. But one area that raises serious ethical concerns is AI’s ability to manipulate human emotions.

Think about it: have you ever watched a YouTube video, only to find yourself clicking on another... then another? Before you know it, an hour has passed, and you're deep into a rabbit hole you never intended to explore. That’s a recommendation algorithm working behind the scenes to keep you engaged. But what happens when AI crosses the line from influencing our emotions to deliberately manipulating them? Let’s dive in.

🎭 What Is Emotional Manipulation in AI?

Emotional manipulation is when one party influences another’s emotions to steer their decisions, sometimes ethically, sometimes not. People do it all the time (think of advertisers, salespeople, or even politicians), but when machines take over this role, things get a little… unsettling.

AI can analyze massive amounts of data, including our past behavior, preferences, and emotional responses, to predict and influence our actions. From personalized ads to emotionally charged chatbots, the goal is often to keep us engaged, make us spend money, or even shape our opinions.

But where do we draw the line between ethical persuasion and unethical manipulation?

🤖 AI’s Toolkit: How Machines Influence Our Emotions

AI doesn’t have feelings (at least, not yet), but it’s remarkably good at reading and influencing ours. Here’s how:

1. Personalized Content Feeds

Ever noticed how your social media feed seems to "know" what you're into? That’s AI at work, analyzing your likes, comments, and watch time to serve up content designed to keep you scrolling. While this helps deliver content you enjoy, it can also keep you trapped in an echo chamber.

2. Chatbots That Mimic Empathy

Customer service chatbots are becoming increasingly sophisticated. Some are programmed to respond with sympathy, saying things like “I’m so sorry you’re having this issue!” even though they don’t actually feel anything. It’s an effective customer service tactic, but it can also mislead people into trusting AI more than they should.

3. Deepfake Videos & AI-Generated Voices

Imagine getting a phone call from what sounds exactly like your boss, telling you to transfer money immediately. That’s not a sci-fi plot; it’s happening right now. AI-driven deepfake technology can clone voices and faces with alarming accuracy, making emotional manipulation a serious threat.

4. AI Recommenders & Nudging Behavior

Have you ever been nudged into making a purchase because “Only 2 left in stock!” popped up? Persuasion tactics like these, increasingly personalized and timed by AI, play on emotions such as urgency, fear of missing out (FOMO), and excitement to get us to act in ways we otherwise might not.

5. AI in Political Campaigns

AI-powered analytics help political campaigns craft highly targeted messages designed to resonate with voters emotionally. While this can be seen as smart campaigning, it can also manipulate public opinion by pushing exaggerated or biased narratives.

⚖️ Where Do We Draw the Ethical Line?

So, is all this emotional influence bad? Not necessarily. After all, humans appeal to each other’s emotions all the time. Parents use emotional appeals to teach kids right from wrong, teachers use motivation tactics to inspire students, and advertisers use storytelling to draw us into a product’s world. But with AI, the scale and precision of this influence raise significant concerns.

1. Informed Consent

One major ethical issue is that people often don’t realize they’re being manipulated. AI operates behind the scenes, using data we might not even be aware it has. Should AI companies be required to be more transparent about how their algorithms influence us?

2. Autonomy & Free Will

If AI can predict and shape our emotions with extreme accuracy, are we still making our own choices? Or are we just puppets, with AI pulling the emotional strings? That’s a tough question, and it challenges our very sense of free will.

3. The Risk of Emotional Exploitation

When AI is programmed to push certain buttons, such as making us feel sad in order to sell us a product, it enters dangerous territory. Emotional exploitation can contribute to depression and anxiety, or cause financial harm when people are manipulated into overspending.

4. Regulation & Ethical AI Development

Governments and tech companies are beginning to discuss regulations to prevent AI systems from being used for unethical emotional exploitation. But regulation is tricky. If AI creators don’t police themselves, should governments step in? And if so, how do you regulate something as complex as AI-driven emotional influence?

🚀 The Future: Could AI Develop Emotional Intelligence?

Right now, AI doesn’t experience emotions; it just mimics them. But what happens if we create AI that genuinely understands and processes emotions? Would that make AI even more dangerous when it comes to manipulation?

Some researchers are working on AI that can detect human emotions through facial expression analysis, voice tone, and even physiological signals like heart rate. If this technology improves, AI could become even better at manipulating emotions, potentially in ways we can’t yet anticipate.

Will AI eventually care about how we feel? Or will it just get better at pretending?

🧐 How Can We Protect Ourselves From AI Manipulation?

If AI is becoming a master manipulator, how can we fight back? Here are a few things you can do:

- Be mindful of your emotions online – If a piece of content triggers a strong emotional reaction (anger, fear, extreme happiness), ask yourself: Am I reacting naturally, or am I being nudged?

- Question personalized recommendations – Whether it's a YouTube playlist, an Amazon suggestion, or a Netflix show, remember that AI is designed to keep you engaged—not necessarily to serve your best interests.

- Limit your data exposure – The less data companies have on you, the less power AI has to manipulate you. Consider using privacy-focused tools, limiting social media sharing, and tweaking your ad preferences.

- Support ethical AI development – Push for transparency and ethical AI policies by supporting organizations that advocate for responsible AI use and data privacy.

🏁 Final Thoughts

AI is an incredible tool, but it’s also a double-edged sword. While it can enhance our lives in countless ways, it also has the potential to manipulate us emotionally in ways we barely notice. As AI continues to evolve, the line between persuasion and manipulation is likely to become even blurrier. That’s why it’s crucial to stay informed and critically evaluate how AI influences our decisions.

At the end of the day, AI isn’t inherently good or evil; how we choose to use (or regulate) it is what will determine its impact on our emotions and society. So next time you find yourself binge-watching, shopping impulsively, or feeling a certain way after an interaction with AI, ask yourself: *Is this really me, or is it the AI talking?*

All images in this post were generated using AI tools.

Category: AI Ethics

Author: Ugo Coleman

Discussion


4 comments

Rhea McLean

This article raises crucial questions about AI's role in emotional manipulation. While technology can enhance our experiences, we must remain vigilant about its potential to exploit vulnerabilities and prioritize ethical considerations in AI development.

June 8, 2025 at 5:01 AM

Ugo Coleman

Thank you for your insights! It's essential to critically examine the ethical implications of AI in emotional manipulation to ensure technology serves humanity positively.

Spencer Oliver

AI's potential for emotional manipulation raises profound ethical concerns we must address.

June 1, 2025 at 11:33 AM

Ugo Coleman

I agree; the ethical implications of AI's emotional manipulation are critical and warrant careful scrutiny to ensure technology serves humanity positively.

Zevonis Good

Thought-provoking article! Ethics in AI is crucial now.

May 25, 2025 at 11:45 AM

Ugo Coleman

Thank you! I'm glad you found it thought-provoking. Ethics in AI is indeed essential as we navigate these complex issues.

Haven Harper

This article raises crucial questions about AI's role in emotional manipulation. As technology evolves, we must prioritize ethical guidelines to ensure that AI enhances human interaction rather than exploits vulnerabilities. Responsible innovation is key to our relationship with AI.

May 23, 2025 at 2:52 AM

Ugo Coleman

Thank you for your insightful comment! I completely agree that prioritizing ethical guidelines is essential as we navigate the complexities of AI in emotional contexts. Responsible innovation is indeed vital for fostering positive human-AI interactions.